Recently, I spent a good chunk of my time working on a Thrift-related bug. Here are some notes I took along the way.

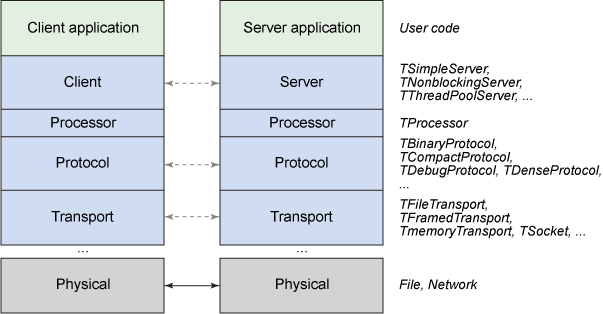

The architecture of Thrift looks like this:

fig:

First, on the server side, a server instance (for instance, a TThreadPoolServer) is started at initialization. Once started, the server calls its serve() method, which essentially keeps listening for incoming requests from clients. When a new client connection arrives, the server first initializes the input/output transports and protocols according to the type of client, and also creates a specific processor for that client. It then spawns a new worker thread (or task) to handle the connection.
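The server-side flow above can be modeled in a few lines of Python. This is a toy sketch, not Thrift's API: `ToyThreadPoolServer` and its three factory parameters are hypothetical, the "accepted connections" are just client names, and a real server keeps listening indefinitely instead of draining a finite list. What it shows is the ordering: the per-connection transport, protocol and processor are built first, then the connection is handed off to a pool thread.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List


class ToyThreadPoolServer:
    """Toy model of accept -> wrap -> dispatch (hypothetical, not the Thrift API)."""

    def __init__(self, transport_factory: Callable, protocol_factory: Callable,
                 processor_factory: Callable, workers: int = 4) -> None:
        self.transport_factory = transport_factory
        self.protocol_factory = protocol_factory
        self.processor_factory = processor_factory
        self.workers = workers

    def serve(self, accepted: Iterable[str]) -> List[str]:
        # A real server blocks on accept() forever; here the accepted
        # connections are a finite iterable of client names.
        with ThreadPoolExecutor(max_workers=self.workers) as pool:
            futures = []
            for client in accepted:
                # Per-connection setup happens before handing off to a worker.
                transport = self.transport_factory(client)
                protocol = self.protocol_factory(transport)
                processor = self.processor_factory(client)
                # The connection itself is then handled on a pool thread.
                futures.append(pool.submit(processor, protocol))
            return [f.result() for f in futures]


server = ToyThreadPoolServer(
    transport_factory=lambda client: f"socket({client})",
    protocol_factory=lambda transport: f"binary({transport})",
    processor_factory=lambda client: (lambda proto: f"{client} handled via {proto}"),
    workers=2,
)
results = server.serve(["alice", "bob"])
print(results)
```

Using a fixed-size pool, rather than one new thread per connection, bounds how many connections are processed concurrently.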

The workflow starts on the client, when it calls transport.open(). The transport can be of different types, such as TSocket or TSaslClientTransport, and open() behaves differently depending on the type. For TSocket, it simply opens the underlying socket, and no application-level messages are exchanged between client and server; for TSaslClientTransport, it initiates a handshake between client and server, which often consists of several rounds of message exchange.
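The difference between the two open() behaviors can be sketched with in-memory stand-ins. Everything here is hypothetical: `FakeServerPeer`, the two transport classes, and the START/CHALLENGE/RESPONSE/COMPLETE messages only loosely imitate a SASL-style negotiation and are not Thrift's wire format.

```python
from typing import List


class FakeServerPeer:
    """Hypothetical server end of a connection; records every message it receives."""

    def __init__(self, challenge_rounds: int) -> None:
        self.challenge_rounds = challenge_rounds
        self.received: List[str] = []

    def reply(self, message: str) -> str:
        self.received.append(message)
        # Keep challenging until the configured number of rounds is used up.
        if len(self.received) <= self.challenge_rounds:
            return "CHALLENGE"
        return "COMPLETE"


class PlainSocketTransport:
    """Like TSocket: open() connects but sends no application-level data."""

    def __init__(self, peer: FakeServerPeer) -> None:
        self.peer = peer
        self.is_open = False

    def open(self) -> None:
        self.is_open = True  # only the underlying connection is established


class SaslLikeTransport(PlainSocketTransport):
    """Like a SASL client transport: open() also runs a multi-round handshake."""

    def open(self) -> None:
        super().open()
        status = self.peer.reply("START")
        while status != "COMPLETE":
            status = self.peer.reply("RESPONSE")


plain_peer, sasl_peer = FakeServerPeer(2), FakeServerPeer(2)
plain, sasl = PlainSocketTransport(plain_peer), SaslLikeTransport(sasl_peer)
plain.open()
sasl.open()
print(len(plain_peer.received), len(sasl_peer.received))  # 0 3
```

With two challenge rounds configured, the plain transport sends nothing on open(), while the SASL-like one sends three messages before the connection is ready for RPCs.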

The life cycle of a task looks like this: it begins by getting the server's customizable event handler, which provides three per-connection operations: createContext, processContext and deleteContext.

- createContext: creates a connection context when the connection is established.
- processContext: processes the context before each RPC call.
- deleteContext: runs before the connection is closed.
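A Python sketch of such a handler follows. The `ServerEventHandler` base class and its snake_case methods are hypothetical stand-ins for the three operations; the real interfaces (for example `TServerEventHandler` in Thrift's Java and C++ libraries) use camelCase names and also receive the connection's protocol or transport objects. The `RpcCountingHandler` subclass is an illustrative customization that counts RPCs per connection.

```python
from typing import Any, Dict, List


class ServerEventHandler:
    """Hypothetical per-connection hook interface with no-op defaults."""

    def create_context(self, client: str) -> Any:
        return None

    def process_context(self, context: Any) -> None:
        pass

    def delete_context(self, context: Any) -> None:
        pass


class RpcCountingHandler(ServerEventHandler):
    """Example customization: count RPCs per connection, record them on close."""

    def __init__(self) -> None:
        self.closed: List[Dict[str, Any]] = []

    def create_context(self, client: str) -> Dict[str, Any]:
        return {"client": client, "rpcs": 0}

    def process_context(self, context: Dict[str, Any]) -> None:
        context["rpcs"] += 1

    def delete_context(self, context: Dict[str, Any]) -> None:
        self.closed.append(dict(context))


handler = RpcCountingHandler()
ctx = handler.create_context("10.0.0.7")
for _ in range(3):
    handler.process_context(ctx)
handler.delete_context(ctx)
print(handler.closed)  # [{'client': '10.0.0.7', 'rpcs': 3}]
```

Because the base class supplies no-op defaults, a handler can override only the hooks it actually needs.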

The task first calls createContext to create a context for the connection. It then enters an infinite loop: each iteration begins with processContext, followed by a call to the process method, which handles one RPC. When the client closes the transport, the task exits the loop, calls deleteContext, and cleans up (closing the transports, etc.).
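The task loop can be sketched as follows, assuming a hypothetical `InMemoryTransport` whose empty request queue stands for the client having closed the connection, and a hypothetical `upper_processor` that handles one RPC per call by upper-casing the request. It is meant to show the order in which the hooks fire, not Thrift's actual worker code.

```python
from collections import deque
from typing import Any, Callable, Deque, Dict, List


class InMemoryTransport:
    """Hypothetical transport: queued requests; an empty queue means the client closed it."""

    def __init__(self, requests: List[str]) -> None:
        self.pending: Deque[str] = deque(requests)
        self.is_open = True

    def read(self) -> str:
        if not self.pending:
            raise EOFError("client closed the transport")
        return self.pending.popleft()

    def close(self) -> None:
        self.is_open = False


class RecordingHandler:
    """Event handler that records the order in which the hooks fire."""

    def __init__(self) -> None:
        self.events: List[str] = []

    def create_context(self) -> Dict[str, Any]:
        self.events.append("createContext")
        return {}

    def process_context(self, context: Dict[str, Any]) -> None:
        self.events.append("processContext")

    def delete_context(self, context: Dict[str, Any]) -> None:
        self.events.append("deleteContext")


def upper_processor(transport: InMemoryTransport) -> str:
    """Hypothetical processor: handle one RPC by upper-casing the request."""
    return transport.read().upper()


def run_task(handler: RecordingHandler,
             processor: Callable[[InMemoryTransport], str],
             transport: InMemoryTransport) -> List[str]:
    replies: List[str] = []
    context = handler.create_context()        # once per connection
    try:
        while True:                           # one iteration per RPC
            handler.process_context(context)  # hook runs before each RPC
            try:
                replies.append(processor(transport))
            except EOFError:
                break                         # client closed: leave the loop
    finally:
        handler.delete_context(context)       # runs before the transport is closed
        transport.close()
    return replies


handler = RecordingHandler()
transport = InMemoryTransport(["ping", "get_status"])
replies = run_task(handler, upper_processor, transport)
print(replies)         # ['PING', 'GET_STATUS']
print(handler.events)  # ['createContext', 'processContext', 'processContext', 'processContext', 'deleteContext']
```

In this sketch processContext fires three times for two requests: the loop only discovers that the client has closed the transport inside an iteration that already began with processContext. The `finally` block ensures deleteContext and the transport close still run if processing raises some other error.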